What Data Visualization Should Do: Simple Small Truth

Yesterday the good folks at IA Ventures asked me to lead off the discussion of data visualization at their Big Data Conference. I felt rather out of place among the high-profile venture capitalists and technologists in the room, but I welcome any opportunity to wax philosophical about the power and danger of conveying information visually.

I began my talk by referencing the infamous Afghanistan war PowerPoint slide because I believe it is a great example of spectacularly bad visualization, and of how good intentions can lead to disastrous results. As it turns out, the war in Afghanistan is actually very complicated. By attempting to represent that complex problem in its entirety, much more is lost than gained. Sticking with that theme, yesterday I focused on three key things that I think data visualization should do:

- Make complex things **simple**

- Extract **small** information from large data

- Present **truth**, do not deceive

The emphasis is added to highlight the goal of all data visualization: to present an audience with simple small truth about whatever the data are measuring. To explore these ideas further, I provided a few examples.

As the Afghanistan war slide illustrates, networks are often the most poorly visualized data. This is frequently because those visualizing network data think it is a good idea to include all nodes and edges in the visualization. This, however, is not making a complex thing simple; rather, it is making a complex thing ugly.

Below is an example of exactly this problem. On the left is a relatively small network (V: ~2,220 and E: ~4,400) with weighted edges. I have used edge thickness to illustrate weights, and used a basic force-directed algorithm in Gephi to position the nodes. This is a network hairball, and while it is possible to observe some structural properties in this example, many more subtle aspects of the data are lost in the mess.

On the right are the same data, but I have used information contained in the data to simplify the visualization. First, I performed a k-core analysis to remove all pendants and pendant chains in the data, an extremely useful technique I have mentioned several times before. Next, I used the weighted in-degree of each node as a color scale for the edges, i.e., the darker the blue, the higher the in-degree of the node the edge connects to. Then, I simply dropped the nodes from the visualization entirely. Finally, I added a threshold weight for the edges so that any edge below the threshold is drawn in the lightest blue on the scale. Using these simple techniques, the community structures are much more apparent and, more importantly, the means by which those communities are related are easily identified (note the single central node connecting nearly all communities).

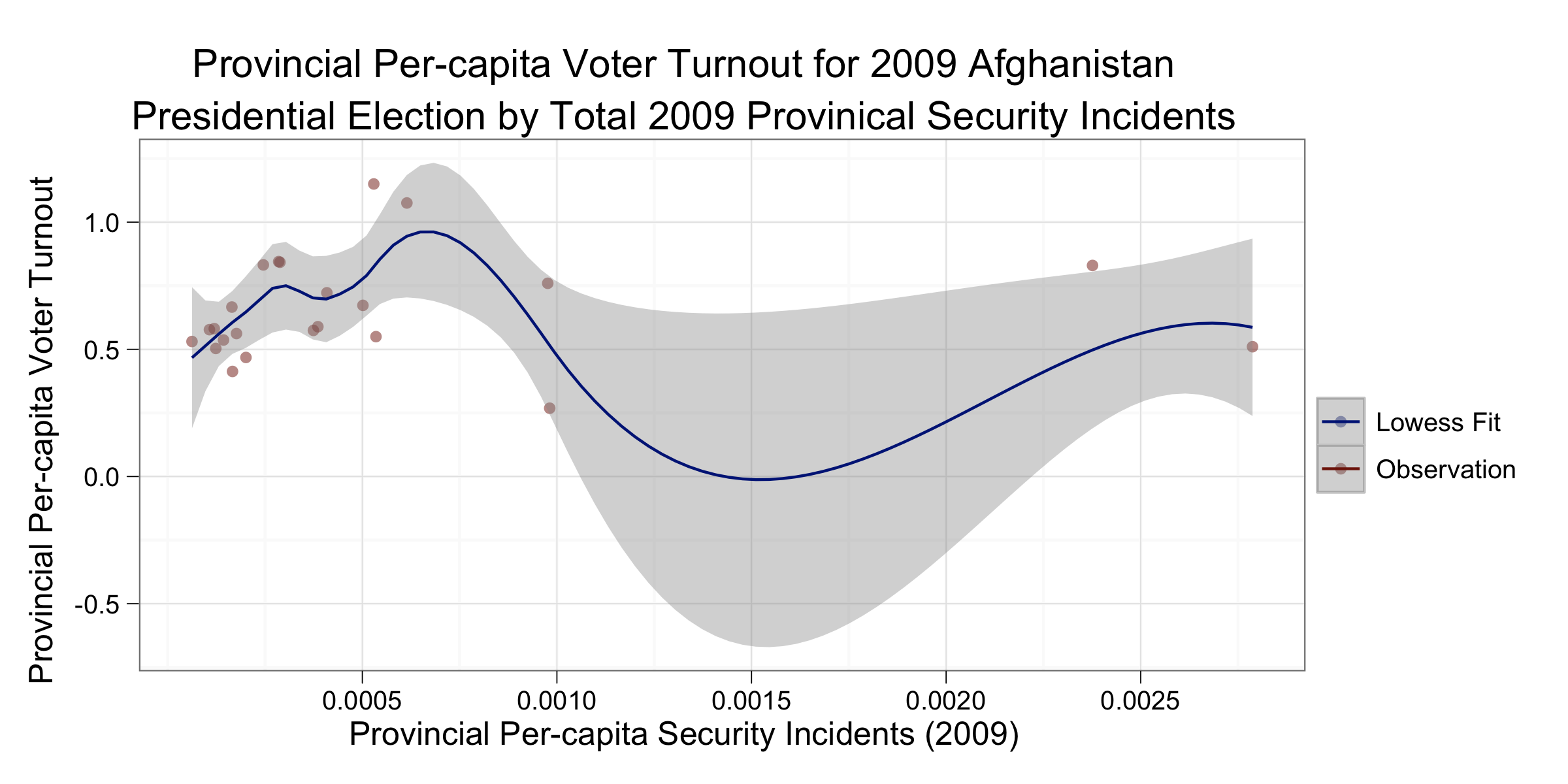
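For readers who want to try the simplification steps on their own network, here is a minimal sketch in plain Python. It is not the Gephi workflow I used for the figure. It assumes the k-core step means the 2-core (iteratively removing nodes with fewer than two neighbors), and the function names, the edge-tuple layout `(source, target, weight)`, and the 0-to-1 shade scale are all hypothetical choices made for illustration.

```python
from collections import defaultdict


def prune_pendants(edges):
    """Keep only edges in the 2-core: drop pendants and pendant chains."""
    # Build an undirected neighbor map; edge direction is ignored for pruning.
    neighbours = {}
    for src, dst, _weight in edges:
        neighbours.setdefault(src, set())
        neighbours.setdefault(dst, set())
        if src != dst:  # self-loops do not count toward degree
            neighbours[src].add(dst)
            neighbours[dst].add(src)

    # Peel off low-degree nodes; removing one can expose the next link in a chain.
    queue = [n for n, nbrs in neighbours.items() if len(nbrs) < 2]
    removed = set()
    while queue:
        node = queue.pop()
        if node in removed:
            continue
        removed.add(node)
        for nbr in neighbours.pop(node):
            neighbours[nbr].discard(node)
            if len(neighbours[nbr]) < 2:
                queue.append(nbr)

    return [(s, d, w) for s, d, w in edges if s not in removed and d not in removed]


def weighted_in_degree(edges):
    """Sum of incoming edge weights for each target node."""
    totals = defaultdict(float)
    for _src, dst, weight in edges:
        totals[dst] += weight
    return dict(totals)


def edge_shades(edges, threshold):
    """Map each edge to a shade in [0, 1]; 0 is the lightest blue, 1 the darkest."""
    in_deg = weighted_in_degree(edges)
    top = max(in_deg.values(), default=1.0) or 1.0
    shades = {}
    for src, dst, weight in edges:
        # Edges below the weight threshold fall back to the lightest shade.
        shades[(src, dst)] = 0.0 if weight < threshold else in_deg[dst] / top
    return shades


# Toy graph: a triangle (a, b, c) with a pendant chain c -> d -> e hanging off it.
toy_edges = [("a", "b", 3.0), ("b", "c", 1.0), ("c", "a", 2.0),
             ("c", "d", 5.0), ("d", "e", 1.0)]
core_edges = prune_pendants(toy_edges)
print(core_edges)
print(edge_shades(core_edges, threshold=1.5))
```

The shades would then be passed to whatever drawing tool you prefer, with the nodes themselves left undrawn.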

To discuss the importance of extracting small information from large data I used the visualization of the WikiLeaks Afghanistan War Diaries that I worked on this past summer. The original visualization is on the left, and while many people found it useful, its primary weakness is the inability to distinguish among the various attack types represented on the map. It is clear that activity gradually increased in specific areas over time; however, it is entirely unclear what activity was driving that evolution. A better approach is to focus on one attack type and attempt to glean information from that single dimension.

On the right I have extracted only the 'Explosive Hazard' data from the full set and visualized it as before. Now, it is easy to see that the technology of IEDs was a primary force in the war, and as has been observed before, the main highway in Afghanistan significantly restricted the operations of forces.
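The extraction step itself is tiny, which is rather the point. As a rough sketch, assuming each report is a dictionary with hypothetical `category` and `date` keys (the real data set's field names may differ, and the records below are made-up placeholders, not actual reports):

```python
from collections import Counter


def select_category(records, category):
    """Keep only the records whose category matches exactly."""
    return [r for r in records if r["category"] == category]


def monthly_counts(records):
    """Count records per year-month, assuming ISO dates like '2006-01-14'."""
    return Counter(r["date"][:7] for r in records)


# Placeholder records for illustration only.
toy_records = [
    {"date": "2006-01-14", "category": "Explosive Hazard"},
    {"date": "2006-01-20", "category": "Other"},
    {"date": "2006-02-03", "category": "Explosive Hazard"},
    {"date": "2006-02-17", "category": "Explosive Hazard"},
]

hazards = select_category(toy_records, "Explosive Hazard")
print(monthly_counts(hazards))
```

Everything downstream (the map, the animation over time) stays the same; only the input shrinks to a single dimension.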

Finally, to show the danger of data deception I replicated a chart published at the Monkey Cage a few months ago on the sagging job market for political science professors. On the left is my version of the original chart published at the Monkey Cage. At first glance, the decline in available assistant professorships over time is quite alarming. The steep slope conveys a message of general collapse in the job market. This, however, is not representative of the truth.

Note that in the visualization on the left the y-axis scale goes from 450 to 700, which happen to be the limits of the y-axis data. Many data visualization tools, including ggplot2, which is used here, will scale their axes by the data limits by default. Often this is desirable, hence the default behavior, but in this case it conveys a dishonest perspective on the job market decline. As you can see from the visualization on the right, by scaling the y-axis from zero the decline is much less dramatic, though still relatively troubling for those of us who will be going on the job market in the not-too-distant future.
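To put a rough number on the distortion, here is a small back-of-the-envelope sketch. It takes the data limits cited above (700 and 450) as the high and low values, and the helper `visual_drop` is a hypothetical name of my own. It ignores any padding a plotting tool may add around the axis limits.

```python
def visual_drop(high, low, axis_min, axis_max):
    """Fraction of the plot's height spanned by a fall from `high` to `low`."""
    return (high - low) / (axis_max - axis_min)


# Axis scaled to the data limits: the decline spans the whole plot.
print(visual_drop(700, 450, axis_min=450, axis_max=700))  # 1.0

# Axis scaled from zero: the same decline spans about a third of the plot.
print(round(visual_drop(700, 450, axis_min=0, axis_max=700), 2))  # 0.36
```

The underlying decline is identical in both cases; only the share of the plot it occupies changes. In ggplot2, one way to force zero into the axis range is `expand_limits(y = 0)`.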

These ideas are very straightforward, which is why I think they are so important to consider when doing your own visualizations. Thanks again to IA Ventures for providing me a small soapbox in front of such a formidable crowd yesterday. As always, I welcome any comments or criticisms.

Cross-posted at dataists